During the 5th-generation fighter's first deployment across the International Date Line, a flight of six F-22s lost all navigation, communications and fuel management systems.

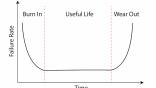

Aircraft reliability is often hard to predict because we are doing things with airplanes we’ve never done before and because the software cannot be fully tested except in actual operational practice. Both factors create exceptions to our ability to anticipate what is going to fail next.

Not too long ago, the realm of flight above 41,000 ft. belonged to the experimental crowd and the military. You rarely considered a flight over 10 hours to be wise. And you would certainly never do that over remote areas without alternates or when flying anything that had fewer than four engines.

I had certainly ticked all three boxes early in my career, but never all three at the same time until I first flew the Gulfstream GV. That aircraft was also my first experience with a Heated Fuel Return System (HFRS). These systems return a portion of the fuel heated by engine oil coolers, helping to retard the cooling of the fuel caused by extended flight at high altitudes.

We routinely spent 10 to 14 hours at high altitudes where the outside air temperature fell well below the standard value of -56° C. Seeing temperatures below -70° C wasn’t unusual and I once saw -80° C. With the help of the HFRS, the fuel in my tanks never dipped below -33° C. In my experience, the system always worked, and I never worried about fuel freezing because of extended flight at high altitudes.

‘Sticky’ Fuel Limits Thrust

I think my relaxed attitude regarding fuel temperatures was typical back in 2008, an attitude that proved questionable when a Boeing 777 flying from Beijing Capital Airport (ZBAA), China, to London Heathrow Airport (EGLL) crashed just short of the runway. The aircraft dealt with the risk of fuel freezing by using a water scavenging system to eliminate water in the fuel tanks, the reasoning being that without water in the fuel, the fuel wouldn’t freeze. Despite water-free fuel, the aircraft lost thrust on both engines while on short final. The crew did well to land 550 m short of the runway, damaging the aircraft beyond repair but sparing the lives of all 149 crew and passengers.

Subsequent investigation revealed possible fuel restrictions in both engines’ fuel-oil heat exchangers. Analysis showed that the fuel didn’t freeze but had become “sticky.” Investigators determined that cold fuel tends to adhere to its surroundings between -5° C and -20° C and is most “sticky” at -12° C. The 777’s fuel was colder than this range until it warmed after the aircraft began its descent, and the stickiness became a factor only once the aircraft needed increased thrust on final approach. The Boeing 777, by this time, had been in operational service for 13 years and had compiled an enviable reliability record. But fuel stickiness was something we didn’t need to understand before the dawn of this kind of long-distance, high-altitude flight.

Another, perhaps more problematic issue to worry about is the complexity of software controlling our aircraft. You may have heard that some daring souls are willing to brave the “beta” version of your favorite software application. These prerelease versions are sent out to users to test in real world conditions, looking for bugs. Some software applications are said to be in “perpetual beta,” meaning they will never really be finished. I think we in the aviation world are doomed to fly perpetual beta releases because our software can never be fully tested except in the real world, because the real world is too complicated to fully predict in a research and development environment.

Consider, for example, the Lockheed Martin F-22 Raptor, which entered U.S. Air Force service in 2005 at a cost of $360 million per copy. It was considered such a technological marvel that it was awarded the prestigious Collier Trophy in 2006. The next year, during its first deployment across the International Date Line from Hawaii to Japan, a flight of a half dozen Raptors lost all navigation, communications and fuel management systems because of what was called a “computer glitch” that seemed to be triggered upon crossing the date line. The pilots were able to visually signal their distress to their air refueling tankers, which escorted them back to Hawaii. The Air Force has never officially explained what caused the glitch, but a fix was instituted within 48 hours.

How can we, as operators, possibly predict the reliability of our aircraft, given their complexity? More importantly, how can we have faith in predicted reliability given to us in terms of failures per thousands of flight hours or sorties?

The Pilot’s Conundrum

Most pilots with more than a few years flying computerized jets will have heard “you need to reboot” from maintenance when reporting a problem after initial power up. A reboot for many aircraft involves turning everything off and waiting a few minutes, but some may also require that some or all aircraft batteries be disconnected.

I was once in this situation with a horizontal stabilizer and, after the reboot, was assured the aircraft was safe to fly. “Why did it fail before and not after the reboot?” I asked. “These things happen, don’t worry about it.” I refused to fly the aircraft, and further investigation revealed the reboot had allowed the software to bypass a System Power-On Self-Test (SPOST) of an electrical brake on the stabilizer. I no longer accept “don’t worry about it” when it comes to airplane problems.

I accept that flight itself is not risk free, but I also reject inflated claims of reliability. In the Gulfstream GVII that I fly these days, for example, we are told the fly-by-wire system could degrade from “Normal” to “Alternate” mode if we lose too many air data or inertial reference sources, or if the flight control computers lose communications with the horizontal stabilizer system. We are told the probability of this happening is less than “1 per 10 million flight hours.”

As an engineer, that makes me think of the claims given for the Space Shuttle. As a pilot, I think that even if the figure of 1 per 10 million flight hours is true, it doesn’t matter if that particular one hour happens with me in the seat.
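To put a figure like “1 per 10 million flight hours” in perspective, here is a minimal sketch of the arithmetic. It assumes the quoted number can be treated as a constant failure rate with independent events (a Poisson model), which is my simplification and not something the manufacturer states. The exposure figures (a 20,000-hour career, a fleet flying 1 million hours) are hypothetical and chosen only for illustration.

```python
import math


def prob_at_least_one(rate_per_hour: float, hours: float) -> float:
    """Probability of at least one event in `hours` of exposure.

    Assumes a constant failure rate and independent events (Poisson model):
    P = 1 - exp(-rate * hours). This is an assumption, not a certified method.
    """
    # expm1(x) = exp(x) - 1, which stays accurate when rate * hours is tiny.
    return -math.expm1(-rate_per_hour * hours)


# Quoted claim: fewer than 1 event per 10 million flight hours.
CLAIMED_RATE = 1e-7

# Hypothetical exposure levels, for illustration only.
exposures = {
    "one 10-hour flight": 10,
    "one pilot's 20,000-hour career": 20_000,
    "a fleet flying 1,000,000 hours": 1_000_000,
}

for label, hours in exposures.items():
    p = prob_at_least_one(CLAIMED_RATE, hours)
    print(f"{label}: {p:.6%}")
```

Under these assumptions the chance over a single pilot's career is roughly 0.2%, while over a million fleet hours it rises to about 9.5%. The number is small for any one of us but not negligible across everyone flying the type, and none of this says anything about whether the claimed rate itself is correct.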

The conundrum we face when it comes to critical system reliability is that we just don’t know for sure and that we must often assume the designers have given us an acceptable safety margin. That is the theory that makes all this work. But what of reality?

In both the “fly-by-cable” T-37 and Airbus A330 fly-by-wire examples, the pilots were presented with situations they had never been trained for and that were not addressed in any manuals. They used their systems knowledge to solve their problems. How do we apply these lessons to any aircraft, no matter the complexity?

First, you should do a risk analysis of all systems and identify those that can kill you if they misbehave. I would include any fly-by-wire system that cannot be turned off and controlled manually. You may also want to consider powerplants where the computers have full authority on the shutdown decision. Next, you should realize that you can never know too much about these systems. What you learn in school is just the starting point. Finally, you should be a consumer of all information related to these systems. Reviewing accident case studies not only helps you to learn from the mistakes of others, it also reveals to you the magic trick performed by quick-thinking pilots dealing with aircraft once thought to be as reliable as yours.

Aircraft Reliability: Theory Versus Reality, Part 1:

https://aviationweek.com/business-aviation/safety-ops-regulation/aircra…

Aircraft Reliability: Theory Versus Reality, Part 2:

https://aviationweek.com/business-aviation/safety-ops-regulation/aircra…

Comments

One other comment re the B777 BA accident, for factual accuracy the "stickiness" referred to is actually the ice in the fuel not the fuel itself. The accident pretty much introduced a whole new area of icing physics.