This last part of the article series focuses on communicating. The second part’s theme is navigating, and the first part’s is aviating.

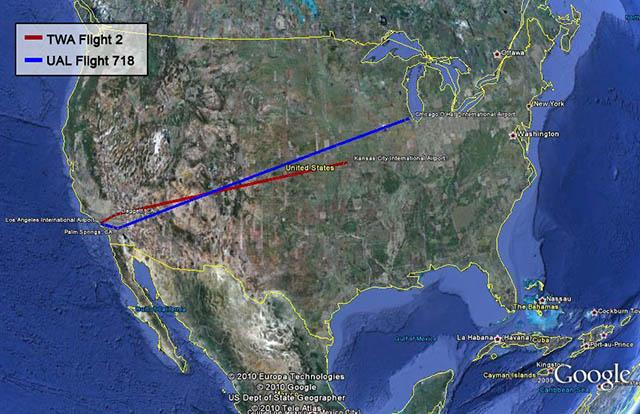

As late as the 1950s, en route air traffic control consisted of pilots maintaining their own separation by looking out the window, with no communications between aircraft or with an air traffic controller. This was the “Big Sky Theory” in action: lots of sky, few airplanes. What could go wrong? On June 30, 1956, a United Airlines Douglas DC-7 and a Trans World Airlines Lockheed 1049A collided over the Grand Canyon in Arizona. The Civil Aeronautics Board determined the official cause was that “the pilots did not see each other in time to avoid the collision.” These days we maintain en route separation through communications, navigation and surveillance, and that often means controller-pilot data link communications (CPDLC).

If you started flying before CPDLC, you probably treated the new technology with suspicion. I remember the first time I received a clearance to climb via data link, I was tempted to confirm the instruction via HF radio. “Did you really clear me to climb?” But as the years have gone by, the novelty has worn off and we accept these kinds of clearances without a second thought. However, I should have kept up my skepticism back then; the aircraft I was flying at the time did not have a latency monitor.

We were assured in training that if we were to receive data-link instructions to set our latency timer we could cheerfully say “Latency timer not available” and be on our way. It was an unnecessary piece of kit, and we didn’t have to worry about it.

On Sept. 12, 2017, an Alaska Airlines flight had a communications management unit (CMU) problem that meant an ATC instruction to climb never made it to the crew. On the next flight, a CMU power reset corrected the issue, and the pending message was delivered. The CMU did not recognize the message as being old, so it was presented to the flight crew as a clearance. The crew dutifully climbed 1,000 ft.

An ATC clearance, like “Climb to and maintain FL370,” is obviously meant for “right now” and certainly not for “sometime tomorrow.” The Alaska Airlines flight had a problem with its Iridium system, which has since been corrected. But a latency timer would have checked whether the clearance was issued within the required time frame and, finding that it was not, would have rejected it.
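
The logic of a latency check is simple enough to sketch in a few lines of Python. This is only an illustration of the idea, not how any particular avionics box implements it: the names `Uplink`, `is_stale` and `present_to_crew` are hypothetical, and the allowed delay is passed in as a parameter because the actual value is whatever ATC instructs the crew to set.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Uplink:
    """A data-link message with the time the ground system stamped it (hypothetical model)."""
    text: str
    sent_at: datetime


def is_stale(msg: Uplink, now: datetime, max_delay: timedelta) -> bool:
    """Return True when the message is older than the allowed delay."""
    return now - msg.sent_at > max_delay


def present_to_crew(msg: Uplink, now: datetime, max_delay: timedelta) -> str:
    """Show a fresh message as a clearance; reject an old one instead of displaying it."""
    if is_stale(msg, now, max_delay):
        return f"REJECTED (stale): {msg.text}"
    return f"DISPLAY: {msg.text}"
```

A message stamped the previous day fails the comparison no matter how the delay is set, which is exactly the case the crew in the 2017 incident needed caught.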

Having controllers issue instructions to the wrong aircraft or having a pilot accept a clearance meant for someone else is nothing new. But the speed of data-link communications opens entirely new opportunities for this kind of confusion. The fix, once again, relies on a large dose of common sense:

(1) Ensure data-link systems are programmed with the correct flight identifications and that these agree with those in the filed flight plan.

(2) Understand the flight manual limitations that apply when data-link systems are degraded, and do not rely on data-link systems that no longer meet their certification requirements.

(3) Apply a “sanity check” to each air traffic clearance and query controllers when a clearance doesn’t make sense. The controller may indeed want you to descend early during your transoceanic crossing, but that would be unusual.
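
Items (1) and (3) amount to two comparisons a crew makes in their heads, and they can be written down as a short Python sketch. Everything here is an assumption made for illustration: the function name `reasons_to_query`, its parameters, and the single rule that a descent during oceanic cruise is unusual. It flags a clearance for a query; it does not decide the clearance is wrong.

```python
from typing import List


def reasons_to_query(
    datalink_flight_id: str,
    filed_flight_id: str,
    current_fl: int,
    cleared_fl: int,
    in_oceanic_cruise: bool,
) -> List[str]:
    """Collect reasons a crew might query ATC before accepting a clearance."""
    reasons = []

    # Item (1): the ID in the data-link system must agree with the flight plan.
    # The comparison is exact on purpose; tidying up the strings could hide a mismatch.
    if datalink_flight_id != filed_flight_id:
        reasons.append("flight ID does not match the filed flight plan")

    # Item (3): an early descent during an oceanic crossing is possible but unusual.
    if in_oceanic_cruise and cleared_fl < current_fl:
        reasons.append("descent clearance is unusual in oceanic cruise")

    return reasons
```

An empty list means nothing looked odd by these two rules; it is not a substitute for the crew’s own judgment about whether the clearance makes sense.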

Envelope Protection

I once wrote in my maintenance log, “Cockpit went nuts” because it was the most apt description of what had happened. We were speeding down the runway around 110 kt, just 15 kt short of V1, when every bell and whistle we had started to complain. The crew alerting system was so full of messages it looked like someone hit a test button we didn’t have.

It turned out one of the angle-of-attack (AOA) vanes became misaligned with the wind momentarily but managed to sort itself out by the time we rotated. It became a known issue and the manufacturer eventually replaced all of the faulty AOA vanes in the fleet. In that aircraft, AOA was a tool for the pilots, but it couldn’t fly the aircraft other than to push the nose down if we stalled. That was on an aircraft with a hydraulic flight control system where the computers could not overrule the pilots. Things are different in fly-by-wire aircraft.

On June 26, 1988, one of the earliest Airbus A320s crashed in front of an airshow crowd at Mulhouse-Habsheim Airfield (LFGB), France. The crew misjudged their low pass altitude and the aircraft decided it was landing when the pilots intended a go-around. The aircraft didn’t allow the engines to spool up in time to avoid the crash, which killed three of the 136 persons on board. I remember at the time thinking it was nuts that this fly-by-wire aircraft didn’t obey the pilot’s command to increase thrust.

Three decades later I got typed in a fly-by-wire aircraft for the first time, a Gulfstream GVII-G500. I thought the G500’s flight control system was far superior to that on the Airbus, or that of any other aircraft. And that still might be true, but like the early Airbus, the G500 is not above overruling the pilot’s intentions.

In 2020, a GVII-G500 experienced a landing at Teterboro Airport (KTEB), New Jersey, that was hard enough to damage part of the structure. It was a gusty crosswind day, and the pilots did not make and hold the recommended “half the steady and all the gust” additive to their approach speed. (The recommended additives have since been made mandatory on the GVII.) The pilots compounded the problem with rapid and full deflection inputs.

The airplane flight manual, even back then, included a caution against this: “Rapid and large alternating control inputs, especially in combination with large changes in pitch, roll or yaw, and full control inputs in more than one axis at the same time, should be avoided as they may result in structural failures at any speed, including below the maneuvering speed.”

That is good advice for any aircraft, fly-by-wire or not. But it reminds those of us flying data-driven aircraft that we need not only to ensure the data input is accurate and the output is handled correctly, but also to understand the process from input to output.

In each of our aviate, navigate and communicate tasks, it is important to compare the data-driven process to how we would carry out the same tasks manually. If the black boxes are doing something you wouldn’t do, you need to get actively involved. Seeing the aircraft turn on its own 90 deg. from course is an obvious problem that needs fixing. But many of our data problems are subtle or even hidden. You cannot assume the computers know your intent; they must be constantly monitored. The “garbage in” problem is more than a problem of you typing in the wrong data; the garbage can come from aircraft sensors, other aircraft computers, from sources outside the aircraft, and even from the original design.

Even when you are the pilot flying the aircraft, you should always be the pilot monitoring the aircraft systems.